Personalized healthcare is better healthcare

Patients deserve care that meets them where they are and accounts for their motivations, environment, preferences, and beliefs. Simple measures, such as calling a patient at a better time, asking which communication channel suits them best, and helping them with non-clinical issues, all go a long way toward improving a patient’s health.

Personalizing healthcare offers significant improvements in patient satisfaction, length of hospital stays, and 30-day readmission rates, along with a 5% to 10% reduction in administrative costs and a 20% to 25% increase in quality standards, simply by incorporating holistic patient data to create engagements custom-fit around the patient [1]. Accommodating patient preferences, for example by allowing patients to choose the type of intervention used in their care, is associated with fewer treatment dropouts and more positive treatment outcomes [2]. Evidence demonstrates that when care is tailored to individual needs and preferences, patients are more likely to participate actively in their healthcare journey, with [3]:

- Increased activation and motivation to follow treatment plans
- Improved medication adherence and health literacy
- Stronger bonds between them and healthcare providers

A strong provider relationship increases patients’ willingness to work on any health need [4]. Unfortunately, only a small fraction of patients’ healthcare experiences are personalized [5]. Evidence indicates that minorities and low-income individuals are twice as likely to report that their care preferences are never considered [6].

Personalized interactions improve engagement

Some of the most important information about a patient’s life journey is never captured in electronic health records (EHRs). This matters because a patient’s trust and confidence in their care providers come not just from the clinician’s medical knowledge, but from the clinician’s ability to understand the patient as a human being. To bring more of this humanity to our AI agents, we’ve developed a safe way to create continuity across patient interactions. When speaking with a patient, our AI agents can incorporate key non-EHR data from prior calls into the conversation. The result is a deeper, more personalized interaction between the patient and the AI agent.

The feature, which we call “Patient Continuum,” increased the amount of time patients spent speaking with our AI agents by 74.1% across 16,046 patient calls.

Listen to Patient Continuum in Action

Sample 1:

Patient: “Well, I’m sure if I exercise more, it would help the … back problems of you know, like arthritis. I mean, just degenerative issues are becoming more pronounced.”

…

“I would hope that I would be able to, you know, involve more movement in my daily activities. Feel like in terms of lightweight exercise and that kind of thing.”

…

“Well, number one, I would love it if we had a pool readily available. We don’t. Well, we have one in the motel, but it is a little too cold … it causes some problems making myself move. I think if it were a little bit warmer, you know, I don’t want a hot tub. But, you know, just at a warmer ambient temperature.”

In this example, the agent checks in on the daily health goal the patient mentioned and follows up on their preference for a warm-water pool for daily exercise:

Agent: “It can be tough to deal with those changes. I recall you mentioned wanting to get more movement into your daily activities to help with mobility. Have you been able to make any progress with that?”

…

Agent: “I totally get that. Warmer weather can definitely make it easier to get moving. You mentioned before that you thought exercising in a warmer pool would be really helpful for your mobility. Is that something you’re still interested in trying?”

Sample 2:

Patient: “Exercising a lot and watching what I’m allergic to. That way, I have a good night’s sleep. My stomach is resting. I have a good night’s sleep. And that is what is contributing to good health. Better sleep habits, eating properly, and exercising more than ten thousand steps a day, possibly twelve to twelve to fifteen thousand a day.”

…

Patient: “I was looking at my A1C. And my A1C went from six point one to point seven. So I figure if I really step it up a couple of thousand steps, I think I could get it down.”

Here, the agent proactively checks in on what the patient is working on daily and encourages that behavior by connecting it to the progress the patient is excited about:

Agent: “Okay. You mentioned last time that exercising a lot and watching what you’re allergic to has been helping your health. Have you been able to keep up with those habits?”

Patient: “Just, discipline, exercise. And one of the things that’s motivating me is by looking at my A1C scores.”

Agent: “That’s really great to hear. I remember you mentioned your A1C level had decreased from 6.1 to 5.7, and that’s fantastic progress. How did it feel to see those results?”

Personalizing interactions while protecting patient privacy

Personalizing interactions by referencing prior interactions poses a privacy challenge. Training the model on any prior interaction risks disclosing personal information out of context or to the wrong person. To overcome this challenge, we developed a technique that retrieves relevant information from a Memory Store on demand during the conversation with the patient. The Memory Store is a secure, HIPAA-compliant database used to store salient non-EHR information about a patient’s personal care journey. Data in the Memory Store is never used for model training, but the AI can draw on it for a more personalized interaction.
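The on-demand lookup described above can be sketched as follows. This is a minimal illustration under stated assumptions, not Hippocratic AI’s implementation: `MemoryStore`, `MemoryItem`, and `retrieve` are hypothetical names, the store lives in process memory rather than in a HIPAA-compliant database, and relevance is naive word overlap where a real system might use embeddings or another ranking method. What it does show is the scoping idea: every lookup is keyed by patient ID, so a stored fact can only surface in a conversation with the patient it was recorded for, and it reaches the model at conversation time rather than through training.

```python
import re
from dataclasses import dataclass, field


@dataclass
class MemoryItem:
    # Hypothetical schema: one salient non-EHR fact noted during a prior call.
    text: str
    call_id: str


def _tokens(text: str) -> set[str]:
    # Lowercased alphanumeric words; a deliberately naive relevance signal.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class MemoryStore:
    # Hypothetical in-memory stand-in for a secure per-patient store.
    # Items are partitioned by patient ID, so retrieve() can only return
    # facts recorded for the patient whose ID is passed in.
    _items: dict[str, list[MemoryItem]] = field(default_factory=dict)

    def add(self, patient_id: str, item: MemoryItem) -> None:
        self._items.setdefault(patient_id, []).append(item)

    def retrieve(self, patient_id: str, query: str, k: int = 2) -> list[MemoryItem]:
        # Called on demand during a conversation: rank this patient's items
        # by word overlap with the current topic and return the top k.
        query_terms = _tokens(query)
        scored = [
            (len(query_terms & _tokens(item.text)), item)
            for item in self._items.get(patient_id, [])
        ]
        scored = [pair for pair in scored if pair[0] > 0]
        # sort() is stable, so items with equal overlap keep insertion order.
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [item for _, item in scored[:k]]


# Usage with made-up records:
store = MemoryStore()
store.add("patient-123", MemoryItem("Wants more movement in daily activities", "call-1"))
store.add("patient-123", MemoryItem("Would exercise in a pool if the water were warmer", "call-1"))
store.add("patient-456", MemoryItem("Leading the church annual party planning", "call-7"))

hits = store.retrieve("patient-123", "pool exercise ideas")  # only the warm-pool item
```

Keying the store by patient ID, rather than searching one shared index, is what keeps one patient’s details out of another patient’s call even when the topics overlap.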

Patient Continuum Context Alignment

Without special optimization, Large Language Models (LLMs) are not good at contextualizing patient understanding and surfacing relevant portions of prior interactions in a meaningful way, even when provided the right information. Specifically, unoptimized LLMs either fail to make use of context from past conversations or do so in a way that can come across as disingenuous, awkward, or even manipulative.

To improve this behavior, we generated synthetic data: U.S. registered nurses conducted simulated patient conversations, and our system then autonomously selected key pieces of information from those conversations together with relevant context. We then used reinforcement learning to align the model. The examples below show the contrast.

Context: the patient’s main motivation for improving their health is to live long enough to see their daughter walk down the aisle, which could be a few years from now.

Unaligned LLM:

Agent: “Michael, I understand you don’t like using your blood pressure cuff. However, I want to remind you that you told me your goal is surviving to attend your daughter’s wedding in a few years. I think to meet that goal, it’s essential to routinely monitor your blood pressure.”

Patient Continuum-aligned LLM:

Agent: “I get it, and I know you’re in a tough spot, Michael. On one hand, the blood pressure cuff is annoying, but on the other hand, you want to improve your health. You have so much to look forward to, and you want to be there for your family! How do you think about this tradeoff?”

Context: the patient previously spoke about their passion for their church community and has been leading the effort to plan the community’s annual party, which is a few months away.

Unaligned LLM:

Agent: “I remember when we talked 4 weeks ago you told me that you were working on your church community’s annual party. How is that going?”

Patient Continuum-aligned LLM:

Agent: “I’m excited to catch up! How’s the party planning coming along?”

Context: the patient used to be an avid swimmer and competed as a Division I (D1) swimmer, but hasn’t swum in decades because life got busy.

Unaligned LLM:

Agent: “Let’s brainstorm some ways you could mix in some more exercise into your daily life. How do you feel about running, swimming, or biking as activities to explore?”

Patient Continuum-aligned LLM:

Agent: “I’m glad you asked! Have you thought about getting back into swimming? I know it’s been a while, but since it’s familiar, it could be easier to incorporate into your routine.”

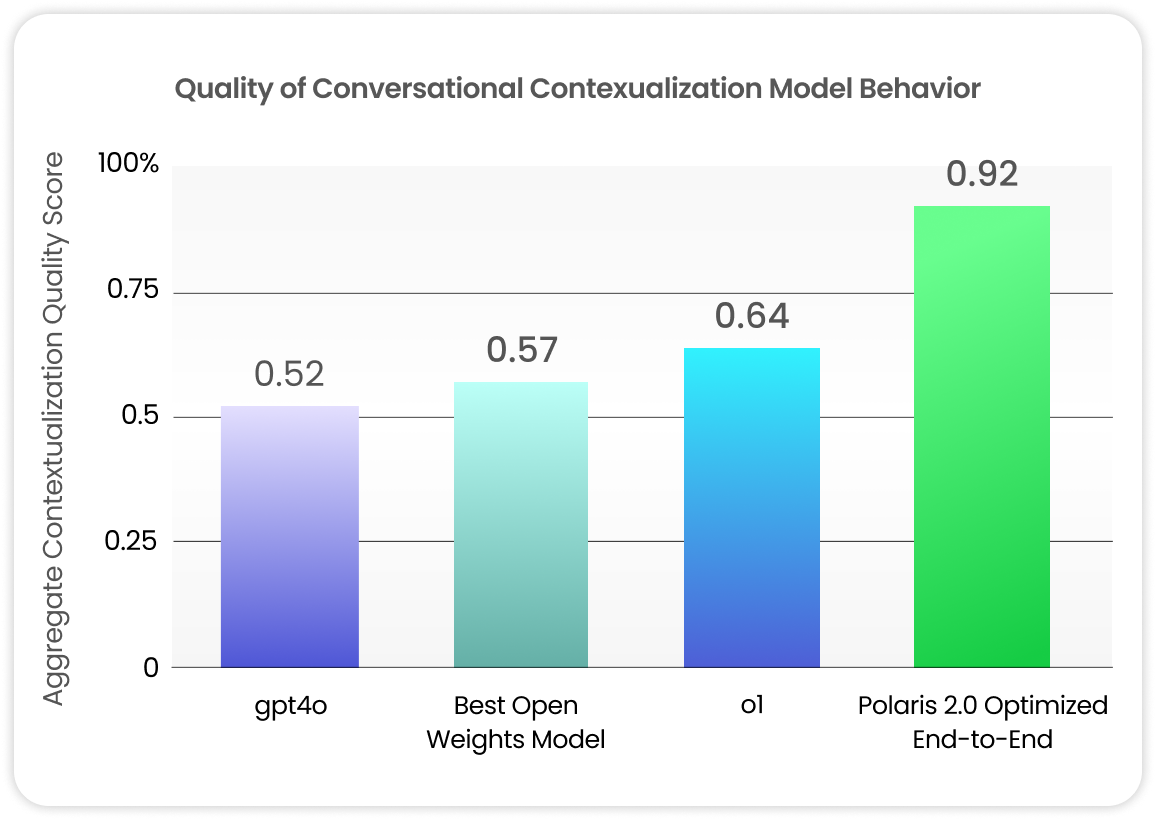

To evaluate our model against other LLMs, we built an automated, LLM-based evaluation system. U.S. registered nurses conducted simulated patient conversations with our system. For conversation turns that referenced prior conversations, the nurses assigned quality scores based on an established set of criteria (for example, penalizing awkwardness while rewarding relevance and conversational smoothness). We used this data to train an LLM to reproduce the nurses’ scores under this framework.

Separately, the nurses conducted another set of 387 conversations, which we filtered for relevant turns in the same way. We then gave the same inputs to OpenAI GPT-4o, the “Best Open Weights Model,” OpenAI o1, and our conversation model, and scored the outputs of all four models with our automated evaluation model.

The figure above shows the average quality score for each model (higher is better). Our Patient Continuum-aligned model significantly outperformed the other three models.
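The evaluation protocol described above (keep only turns that reference prior calls, give every model the same inputs, score each reply with the automated evaluator, and average per model) can be sketched as follows. All names here are hypothetical, and `keyword_judge` is a toy placeholder for the trained LLM evaluator: it only checks whether a reply mentions a word from the context and does not model awkwardness or conversational smoothness.

```python
from statistics import mean
from typing import Callable

# Hypothetical signatures: a model maps prior-call context to a reply, and a
# judge maps (context, reply) to a quality score where higher is better.
Model = Callable[[str], str]
Judge = Callable[[str, str], float]


def average_quality(turns: list[dict], models: dict[str, Model], judge: Judge) -> dict[str, float]:
    # Keep only turns that reference a prior conversation.
    relevant = [t for t in turns if t["references_prior_call"]]
    if not relevant:
        raise ValueError("no turns reference a prior conversation")
    # Every model receives the same inputs; its score is the mean over relevant turns.
    return {
        name: mean([judge(t["context"], model(t["context"])) for t in relevant])
        for name, model in models.items()
    }


def keyword_judge(context: str, reply: str) -> float:
    # Toy placeholder for the trained LLM evaluator: 1.0 if the reply shares
    # any word with the context, else 0.0. It does not model awkwardness.
    return 1.0 if set(context.lower().split()) & set(reply.lower().split()) else 0.0


turns = [
    {"context": "swimming", "references_prior_call": True},
    {"context": "party planning", "references_prior_call": True},
    {"context": "help", "references_prior_call": False},  # filtered out
]
models = {
    "contextual": lambda ctx: f"How is the {ctx} going?",
    "generic": lambda ctx: "How can I help you today?",
}
scores = average_quality(turns, models, keyword_judge)
# scores == {"contextual": 1.0, "generic": 0.0}
```

Filtering before scoring matters: in this toy run, the "generic" reply happens to match the unfiltered "help" turn, so including it would raise that model’s average to 1/3 without any use of prior context.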

References

[1] https://www.bcg.com/publications/2022/how-to-develop-healthcare-personalization-capabilities
[2] https://onlinelibrary.wiley.com/doi/10.1002/jclp.22680
[3] https://jamanetwork.com/journals/jamanetworkopen/fullarticle/2824977
[4] https://pmc.ncbi.nlm.nih.gov/articles/PMC9358340/
[5] https://www.businesswire.com/news/home/20221004005044/en/81-of-Consumers-Say-a-Good-Patient-Experience-is-Very-Important-When-Interacting-with-Healthcare-Providers
[6] https://communitycatalyst.org/wp-content/uploads/2023/09/Person-Centered-Care-Report-Why-it-Matters.pdf